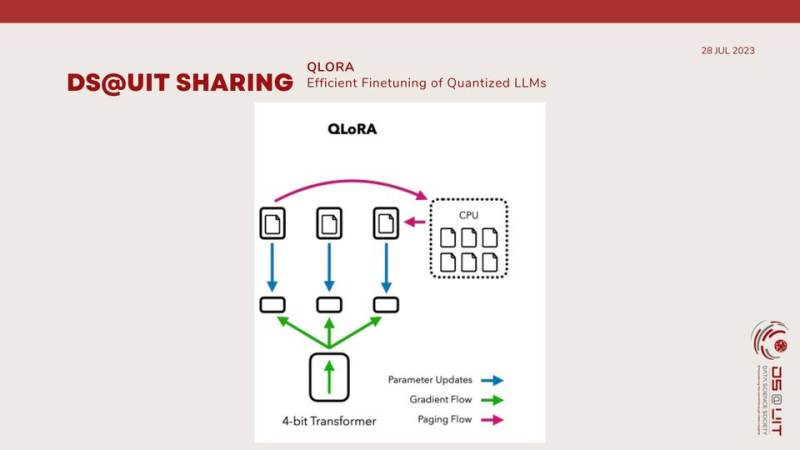

QLoRA (Quantized Low-Rank Adapters) builds on the success of LoRA (Low-Rank Adaptation) by adding quantization, which makes fine-tuning large language models (LLMs) even more efficient. Both techniques address the same problem: LLMs have billions of parameters, so fine-tuning them in the conventional way requires very large amounts of GPU memory.

QLoRA tackles the memory and computational challenges of fine-tuning by employing two key strategies:

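Quantization: QLoRA stores the frozen weights of the pretrained LLM in 4-bit precision, using a data type the QLoRA paper calls 4-bit NormalFloat (NF4). During the forward and backward passes, these weights are dequantized on the fly to a 16-bit format (BFloat16) for computation. This sharply reduces the memory footprint of the model with little loss in quality.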
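As a rough illustration of block-wise quantization, here is a minimal pure-Python sketch. It is a deliberate simplification, not QLoRA's NF4 scheme: it maps each weight to one of 15 evenly spaced signed integer levels, whereas NF4 places its 16 levels at quantiles of a normal distribution. The function names, the block size, and the example weights are illustrative assumptions. Each block keeps one scale (its largest absolute value divided by 7), and every weight is stored as a small integer code relative to that scale.

```python
def quantize_blockwise(weights, block_size=64, bits=4):
    """Quantize a flat list of floats block by block using absmax scaling.

    Simplified stand-in for NF4: evenly spaced signed integer levels
    (-7..7 for 4 bits) instead of normal-distribution quantiles.
    """
    qmax = 2 ** (bits - 1) - 1  # largest code magnitude, 7 for 4 bits
    codes, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        absmax = max(abs(w) for w in block) or 1.0  # all-zero block: any scale works
        scale = absmax / qmax
        scales.append(scale)
        # Round each weight to the nearest level and clamp to the valid code range
        codes.extend(max(-qmax, min(qmax, round(w / scale))) for w in block)
    return codes, scales


def dequantize_blockwise(codes, scales, block_size=64):
    """Recover approximate floats: code times the scale of its block."""
    return [c * scales[i // block_size] for i, c in enumerate(codes)]


# Illustrative weights, split into two blocks of 4 to keep the example small
weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.0, 0.25, -0.81]
codes, scales = quantize_blockwise(weights, block_size=4)
restored = dequantize_blockwise(codes, scales, block_size=4)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes)  # [1, -4, 3, 7, -1, 0, 2, -7]
print(max_error)
```

Each restored weight lies within half a quantization step of the original, so the reconstruction error is bounded by the block's scale; smaller blocks track outliers more tightly at the cost of storing more scales.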

Low-rank adaptation: Instead of updating all of the LLM's weights, QLoRA keeps the quantized base model frozen and trains small low-rank adapters: pairs of small matrices whose product approximates the change each weight matrix needs during fine-tuning. Gradients flow through the frozen 4-bit weights into the adapters, so only a tiny fraction of the parameters is trained, which cuts memory use and makes fine-tuning cheaper.
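The adapter idea can be sketched as a linear layer that adds a scaled low-rank correction to a frozen weight matrix: y = W x + (alpha / r) · B (A x). This minimal pure-Python sketch assumes the initialization and scaling described in the original LoRA paper (A random, B zero, scaling alpha / r); the class name, rank, alpha, and example values are hypothetical choices for illustration. In QLoRA, W would be the 4-bit base weight dequantized for the computation; here it is an ordinary list of floats. The helper at the end shows why adapters are cheap: for a 4096 × 4096 weight matrix with rank 8, they add 8 · (4096 + 4096) = 65,536 trainable parameters instead of the 16,777,216 in W, about 0.4%.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Hypothetical LoRA layer: y = W x + (alpha / r) * B (A x).

    W is frozen; only A (r x d_in) and B (d_out x r) would be trained.
    """

    def __init__(self, weight, rank=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen base weight
        self.scaling = alpha / rank
        # A starts small and random, B starts at zero, so B (A x) = 0 and the
        # layer initially behaves exactly like the frozen base layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * d for b, d in zip(base, delta)]


def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters: full weight update vs. low-rank adapters."""
    return d_out * d_in, rank * (d_in + d_out)


W = [[0.2, -0.1, 0.0, 0.5], [0.3, 0.3, -0.2, 0.1], [0.0, 0.4, 0.1, -0.3]]
layer = LoRALinear(W, rank=2, alpha=4.0)
x = [1.0, 2.0, -1.0, 0.5]
print(layer.forward(x) == matvec(W, x))  # True: adapters start as a no-op
full, adapters = lora_param_counts(4096, 4096, rank=8)
print(full, adapters, adapters / full)  # 16777216 65536 0.00390625
```

Starting B at zero means fine-tuning begins from exactly the pretrained model's behavior; training then moves only A and B.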

QLoRA offers several compelling advantages over traditional fine-tuning methods:

Reduced memory footprint: Combining 4-bit quantization with low-rank adapters substantially reduces the memory needed to fine-tune a model; the QLoRA paper reports fine-tuning a 65B-parameter model on a single 48 GB GPU. The quantized base model plus its small adapters is also easier to run on hardware with limited memory.
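The memory savings can be checked with back-of-the-envelope arithmetic. The sketch below counts only the bytes needed to store the base weights; it ignores activations, gradients, optimizer state, and the adapters themselves. The 0.5 extra bits per parameter assume one 32-bit scale per block of 64 weights, an illustrative setting (QLoRA's double quantization shrinks this overhead further). For a 65B-parameter model, 16-bit weights need about 130 GB, while 4-bit weights need about 32.5 GB, or about 36.6 GB with the per-block scales: small enough to fit on a single 48 GB GPU, which the 16-bit weights alone would not.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Bytes needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


n = 65e9                                         # 65B-parameter model
fp16 = weight_memory_gb(n, 16)                   # 16-bit weights
int4 = weight_memory_gb(n, 4)                    # 4-bit codes only
int4_scaled = weight_memory_gb(n, 4 + 32 / 64)   # plus one 32-bit scale per 64 weights
print(fp16, int4, int4_scaled)                   # 130.0 32.5 36.5625
```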

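Faster fine-tuning: Because only the small adapters are trained, and the model fits on fewer or smaller GPUs, fine-tuning runs become cheaper and easier to repeat, allowing faster model development and iteration. Dequantizing the 4-bit weights does add some per-step overhead compared with plain 16-bit LoRA.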
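One concrete reason training only the adapters is cheaper: optimizers such as Adam keep extra state for every trainable parameter, typically two 32-bit moment estimates. The sketch below compares that state for full fine-tuning of a 7B-parameter model against a hypothetical adapter budget of 40 million trainable parameters. The adapter count is an illustrative assumption, since the real number depends on the rank and on which layers receive adapters, and the sketch ignores gradients, master weights, and activations.

```python
def adam_state_gb(trainable_params, bytes_per_value=4, values_per_param=2):
    """Memory for Adam's first and second moment estimates, in GB (1e9 bytes)."""
    return trainable_params * bytes_per_value * values_per_param / 1e9


full_ft = adam_state_gb(7e9)    # every weight is trainable
adapters = adam_state_gb(40e6)  # hypothetical adapter parameter count
print(full_ft, adapters)        # 56.0 0.32
```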

Comparable performance: In the QLoRA paper's experiments, 4-bit QLoRA fine-tuning matched the task performance of full 16-bit fine-tuning, showing that quantizing the base model does not meaningfully degrade the original LLM's capabilities.

Orthogonal to other methods: QLoRA is orthogonal to many other parameter-efficient methods, so it can be combined with them for further optimization and customization.

For more details, visit: https://www.facebook.com/dsociety.uit.ise/posts/pfbid02tXFMbpwDU2KXQhtwc...

Ha Bang - Media Collaborator, University of Information Technology

Nhat Hien - Translation Collaborator, University of Information Technology